Pyarrow

import random

import string

import numpy as np

import pandas as pd

import pyarrow as pa

import pyarrow.csv as csv

from datetime import datetime

def gen_random_string(length: int = 32) -> str:

return ''.join(random.choices(

string.ascii_uppercase + string.digits, k=length)

)

dt = pd.date_range(

start=datetime(2000, 1, 1),

end=datetime(2021, 1, 1),

freq='min'

)

np.random.seed = 42

df_size = len(dt)

print(f'Dataset length: {df_size}')

df = pd.DataFrame({

'date': dt,

'a': np.random.rand(df_size),

'b': np.random.rand(df_size),

'c': np.random.rand(df_size),

'd': np.random.rand(df_size),

'e': np.random.rand(df_size),

'str1': [gen_random_string() for x in range(df_size)],

'str2': [gen_random_string() for x in range(df_size)]

})

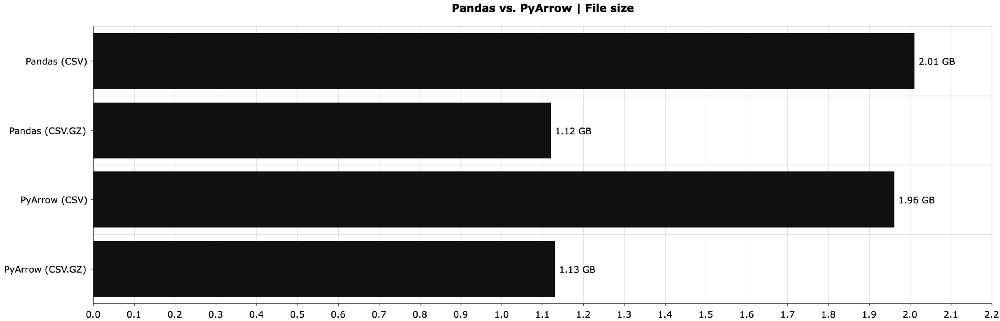

df.to_csv('csv_pandas.csv.gz', index=False, compression='gzip')

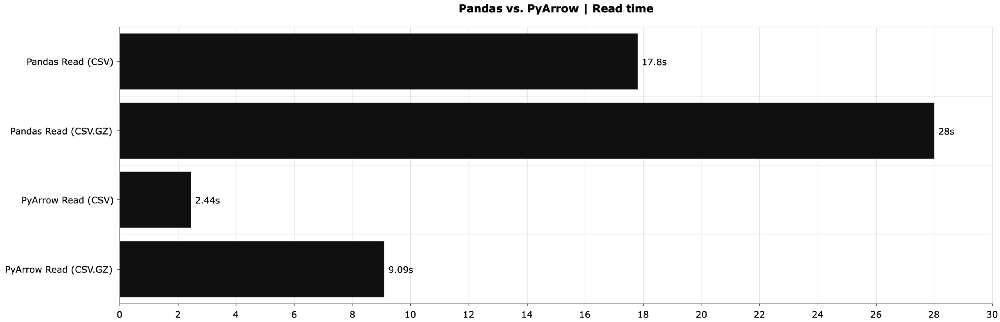

df1 = pd.read_csv('csv_pandas.csv')

df_pa = df.copy()

df_pa['date'] = df_pa['date'].values.astype(np.int64) // 10 ** 9

df_pa_table = pa.Table.from_pandas(df_pa)

csv.write_csv(df_pa_table, 'csv_pyarrow.csv')

with pa.CompressedOutputStream('csv_pyarrow.csv.gz', 'gzip') as out:

csv.write_csv(df_pa_table, out)

df_pa_1 = csv.read_csv('csv_pyarrow.csv')

df_pa_2 = csv.read_csv('csv_pyarrow.csv.gz')

df_pa_1 = df_pa_1.to_pandas()